Edge Computing Vs Artificial Intelligence

- Khirod Behera

- Sep 29, 2020


Table of Contents:

Introduction to Edge Computing

Why Edge Computing Came to the Market

Definitions of Edge Computing

Edge Computing in AI

How Edge Computing Helps AI

How AI Helps the Edge

Why Edge AI Is Important

Why We Should Use the Edge for AI

Implementations of Edge AI

Conclusion: My Point of View

Edge is a buzzword, like IoT and AI. "Edge" means a layer or a boundary.

Before cloud computing, we had to do all our processing on a Linux machine that acted as our server. Then we started using cloud computing, which is efficient and powerful. But now there is relatively little left to gain from centralized cloud computing alone, especially for latency, because data still has to travel to a distant data center. That is what brought edge computing to the market.

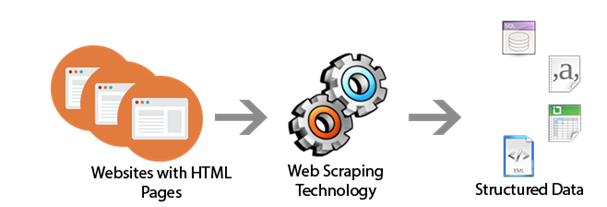

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the devices where data is generated. Edge computing grew out of content delivery networks (CDNs). Today, companies use virtualization to extend these capabilities, and edge computing can be a very good option for doing so.

Definition:

--> "Edge" refers to the geographic distribution, or outer boundary, of an area. Edge computing is computation performed near the source of the data. Instead of relying on a single, centralized cloud location, we can do our computing in one of dozens of data centers near us.

--> In other words, edge computing brings the cloud closer to you.

Edge in AI:

Originally, the edge was the layer meant to handle the local compute, storage, and processing that the cloud cannot handle well. It reduces the latency involved in the round trip to the cloud.

Edge AI means that AI algorithms run locally on a hardware device. The algorithms use data (sensor readings or signals) that is created on the device itself.

A device using Edge AI does not need a network connection to work properly; it can process data and make decisions independently.

To use Edge AI, you need a device with a microprocessor and sensors.
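To make "process data and make decisions without a connection" concrete, here is a minimal sketch of an on-device decision loop. It assumes a stream of numeric sensor readings, and it swaps in a simple z-score anomaly check as a stand-in for a real trained model; the function names and thresholds are hypothetical.

```python
from statistics import mean, pstdev


def is_anomalous(history, reading, threshold=3.0):
    """Stand-in for an on-device model (assumption: a z-score test, not a trained
    network). Flags a reading more than `threshold` standard deviations from the
    recent history."""
    if len(history) < 2:
        return False  # not enough data to judge yet
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return reading != mu
    return abs(reading - mu) / sigma > threshold


def run_locally(readings, window=5):
    """Process a stream of sensor readings entirely on the device.
    No network call is made anywhere in this loop."""
    decisions = []
    history = []
    for r in readings:
        decisions.append("alert" if is_anomalous(history, r) else "ok")
        history.append(r)
        history = history[-window:]  # keep only a short rolling window
    return decisions


print(run_locally([20.0, 20.5, 19.8, 20.2, 20.1, 35.0]))
```

The last reading jumps far above the recent values, so the device raises an alert on its own, without consulting the cloud.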

How Edge Computing Helps AI

For example, an Edge AI application running on a power tool generates results and stores them locally on the device. After working hours, the tool connects to the internet and sends the data to the cloud for storage and further processing.
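The "store locally, upload after working hours" pattern is often called store-and-forward. Below is a minimal sketch under stated assumptions: `upload` is a hypothetical callable standing in for whatever cloud client the device really uses, and connectivity is passed in as a flag rather than detected.

```python
import json


class StoreAndForward:
    """Buffer results on the device and send them to the cloud in one batch later.

    `upload` is a hypothetical callable: it receives a JSON string and returns
    True if the cloud accepted it.
    """

    def __init__(self, upload):
        self._upload = upload
        self._buffer = []

    def record(self, result):
        # During working hours: store locally, no network needed.
        self._buffer.append(result)

    def flush(self, connected):
        # After working hours: send only when a connection is available.
        if not connected or not self._buffer:
            return 0
        payload = json.dumps(self._buffer)
        if self._upload(payload):
            sent = len(self._buffer)
            self._buffer.clear()
            return sent
        return 0  # upload failed: keep the data for the next attempt

    def pending(self):
        return len(self._buffer)
```

Keeping the buffer when an upload fails means a flaky connection delays the data but does not lose it.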

Intelligence on the edge means that even the smallest devices and machines around us are able to sense their environment, learn from it, and react to it.

This allows, for instance, the machines in a factory to make higher-level decisions, act autonomously, and report important flaws or improvements back to the user or the cloud.

Suppose, for example, we have a self-driving car that must process data in real time, because many sensors and devices are connected to the car and all of them need to work together.

If we relied on cloud computing for this, our processing could slow down and our computations would suffer from network latency.

To overcome that problem, we use edge computing close to the device: we do all the processing at the edge, and later, after working hours, we send the data to the cloud.
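A quick calculation shows why latency matters for a moving car. The sketch below computes how far a vehicle travels while it waits for a decision. The 100 ms "cloud" and 10 ms "edge" delays are hypothetical round numbers chosen for illustration, not measurements.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Metres a vehicle travels while waiting `delay_ms` milliseconds for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Hypothetical delays: a cloud round trip vs. on-board (edge) processing.
for label, delay in [("cloud, assumed 100 ms", 100), ("edge, assumed 10 ms", 10)]:
    print(f"{label}: {distance_during_delay(100, delay):.2f} m at 100 km/h")
```

Under these assumptions the car covers about 2.8 m before a cloud answer arrives, versus under 0.3 m with local processing.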

How AI Helps the Edge

Machine learning and deep learning depend heavily on GPU power to do their processing. Compared with cloud infrastructure, edge computing has limited resources and computing power, so deep learning models deployed at the edge do not get the same horsepower as in the public cloud, which may slow down inference.

To bridge the gap between the data center and the edge, some chip companies are building accelerators that significantly speed up model inference. While these chips are not comparable to GPUs running in the cloud, they do accelerate the inference process.
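One rough way to reason about the effect of an accelerator is an idealized estimate: inference time is approximately the number of operations per inference divided by the device's sustained throughput. Real latency is usually higher because of memory transfers and other overheads. All numbers below are hypothetical placeholders, not benchmarks of any specific chip.

```python
def estimated_latency_ms(model_gops, device_gops_per_s):
    """Idealized inference time in ms: operations per inference / sustained throughput.
    Ignores memory bandwidth, data transfer, and scheduling overheads."""
    return model_gops * 1000 / device_gops_per_s


MODEL_GOPS = 5  # hypothetical model: 5 billion operations per inference

# Hypothetical sustained throughputs, for illustration only.
for device, gops_per_s in [("edge CPU only", 50), ("edge CPU + accelerator", 1000)]:
    print(f"{device}: ~{estimated_latency_ms(MODEL_GOPS, gops_per_s):.0f} ms per inference")
```

With these placeholder figures, the accelerator cuts the estimate from about 100 ms to about 5 ms per inference.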

Three examples of AI accelerators are NVIDIA Jetson, Intel Movidius Myriad chips, and Google Edge TPU.

The combination of hardware accelerators and software platforms is becoming important for running models for inference.

By accelerating AI inference, the edge will become an even more valuable tool and change the ML pipeline as we know it.

Why is Edge AI important?

Edge AI can perform tasks such as data creation and decision-making in milliseconds, whereas sending them to a remote server can take considerably longer. This is crucial for real-time operations in self-driving cars, robots, and many other areas.

Edge AI reduces data communication costs, because less data is transmitted.

By processing data locally, you avoid streaming large amounts of data to the cloud, which could make you vulnerable to privacy breaches.
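To give a sense of scale for the communication savings, the sketch below compares streaming every uncompressed 1080p frame at 30 fps with sending only small detection results from the edge. Real video is compressed, so the raw figure is an upper bound; the one-200-byte-result-per-second rate is a hypothetical assumption.

```python
def raw_video_bytes_per_s(width, height, channels=3, fps=30):
    """Uncompressed bytes per second if every frame were streamed to the cloud."""
    return width * height * channels * fps


def results_bytes_per_s(results_per_s, bytes_per_result):
    """Bytes per second if the edge device only sends its inference results."""
    return results_per_s * bytes_per_result


raw = raw_video_bytes_per_s(1920, 1080)   # 1080p, 3 bytes per pixel, 30 fps
results = results_bytes_per_s(1, 200)     # hypothetical: one 200-byte result per second
print(f"raw: {raw:,} B/s, results only: {results} B/s")
```

Even allowing for heavy video compression, sending results instead of raw frames shrinks the traffic by orders of magnitude.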

Why Run AI Algorithms on the Edge?

Consider a cloud-based architecture, where inference happens in the cloud.

Step 1: Request with input image

In image processing, or any other real-time task where we need to send data to a server for processing, latency is very important. If our images have a high resolution, the data is much larger, and transferring and processing all of it becomes difficult.

In that case, we could reduce the resolution and size of each image before sending it to the server.

But accuracy may suffer because of the lower data quality.

Instead, we can process the high-resolution images at the edge, and later send the data and outputs to the cloud for further processing and storage.

This approach is especially helpful in image processing.
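The trade-off between image size and data quality can be seen in a tiny example. This sketch assumes a grayscale image stored as a list of rows with even dimensions, and halves the resolution by averaging each 2x2 block. The payload shrinks by 4x, but a single bright pixel, which might be the detail a model needs, gets diluted.

```python
def downsample_2x(image):
    """Halve width and height by averaging each 2x2 block.
    Assumes a grayscale image as a list of equal-length rows with even dimensions."""
    h, w = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) // 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


image = [[0] * 4 for _ in range(4)]  # 4x4 dark image
image[0][0] = 255                     # one bright pixel of detail
small = downsample_2x(image)
print(small)  # → [[63, 0], [0, 0]]
```

The downsampled image has a quarter of the pixels to transmit, but the bright detail has dropped from 255 to 63, which illustrates why accuracy can suffer.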

Step 2: Run inference in the cloud

Another option is to run inference in the cloud, but as close to the device as possible. Take Amazon EC2 as an example. EC2 is not edge computing in the strict sense, but it can serve a similar purpose: before creating an EC2 instance, we select a region close to our devices or to our own location, so that our operations run quickly and with lower latency.
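Choosing a region close to the device can be sketched as a nearest-location lookup using great-circle (haversine) distance. The three AWS region codes are real, but the coordinates are approximate city locations used for illustration, and the device location (roughly Bhubaneswar) is hypothetical. Distance is only a proxy; in practice, measured latency to each region is a better basis for the choice.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Approximate city coordinates (lat, lon) for three AWS regions; illustrative only.
REGIONS = {
    "ap-south-1": (19.08, 72.88),      # Mumbai
    "ap-southeast-1": (1.35, 103.82),  # Singapore
    "eu-central-1": (50.11, 8.68),     # Frankfurt
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = sin(dlat / 2) ** 2 + cos(lat1) * cos(lat2) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def nearest_region(device_location, regions=REGIONS):
    """Return the region whose (approximate) location is closest to the device."""
    return min(regions, key=lambda name: haversine_km(device_location, regions[name]))


print(nearest_region((20.30, 85.82)))  # → ap-south-1
```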

Implementations of Edge AI

Vision recognition with deep learning

Motion detection applications for security cameras

Wearable healthcare devices

Autonomous vehicles

Smart home IoT devices

Digital image processing devices

Lane traffic congestion tracking

Air quality, water quality and leakage, and structural monitoring

Logistics and container tracking

Sensor-based data collection from farm equipment
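As an illustration of one item in this list, motion detection for a security camera can run entirely on the device. The sketch below uses frame differencing, a classic simple technique swapped in here for illustration (a production system might use a deep learning model instead). Frames are assumed to be grayscale lists of rows, and both thresholds are hypothetical values.

```python
def motion_detected(prev, curr, pixel_threshold=25, min_changed_fraction=0.01):
    """Frame differencing: count pixels whose brightness changed by more than
    `pixel_threshold` and report motion if at least `min_changed_fraction`
    of the frame changed."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= min_changed_fraction


still = [[10] * 10 for _ in range(10)]
moved = [row[:] for row in still]
moved[5][5] = 200  # one pixel changes sharply: 1% of a 10x10 frame
print(motion_detected(still, moved))
```

Because only a yes/no decision (or a short clip when motion occurs) needs to leave the camera, this fits the bandwidth and privacy arguments made earlier.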

Conclusion: My Point of View

In my view, it is not wise to use edge computing everywhere.

We need to check our requirements: if our application is tolerant of delay and latency does not matter, we should choose cloud computing.

If our application needs low latency, as in real-time data pushing and integration, we should choose edge computing.
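The rule of thumb above can be written as a small decision helper. This is a hypothetical sketch: the latency budget and the cloud and edge timings are inputs you would have to estimate or measure for your own application.

```python
def choose_platform(latency_budget_ms, cloud_round_trip_ms, cloud_inference_ms, edge_inference_ms):
    """Prefer the cloud when its total response time still fits the latency budget;
    otherwise use the edge if the device can meet the budget; else report 'neither'."""
    cloud_total = cloud_round_trip_ms + cloud_inference_ms
    if cloud_total <= latency_budget_ms:
        return "cloud"  # latency-tolerant: centralised resources are good enough
    if edge_inference_ms <= latency_budget_ms:
        return "edge"   # tight deadline: only local processing is fast enough
    return "neither"    # needs a faster model, an accelerator, or a looser budget


print(choose_platform(1000, 120, 20, 80))  # a latency-tolerant batch task
print(choose_platform(50, 120, 20, 30))    # a real-time control task
```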

Check out more blogs:

CoE-Ai Blog|blog.coeaibbsr.in
