Machine Learning in Android with AI Tool

- Susantini Behera

- Aug 22, 2020

- 11 min read

Machine learning can be integrated into Android apps using ML Kit, an AI toolkit from Firebase.

What is ML Kit?

It is a mobile SDK (Software Development Kit) that brings Google's machine learning expertise to Android and iOS apps.

Why is ML Kit needed?

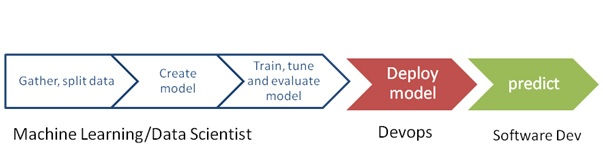

In the general process, implementing machine learning goes through three stages:

Pre-processing for Machine Learning - First, a machine learning engineer or data scientist gathers data for a business problem. The data is then cleaned and split into training and test sets. Next, a model is created and trained, and finally the model is evaluated.

Deployment - A DevOps engineer deploys the trained model to a remote server or database.

Prediction - After deployment, the software developer uses the model for prediction and analysis.

Figure 1: General Process for Machine Learning Implementation
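The pre-processing stage described above is generic machine learning work that ML Kit lets an app skip. As an illustration only (this is not part of ML Kit), a shuffled train/test split can be sketched in plain Java like this. The class name `TrainTestSplit`, the fixed seed and the 20% test fraction are arbitrary choices for the example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Illustrative train/test split; generic ML pre-processing, not an ML Kit API. */
public class TrainTestSplit {

    /** Holds the two partitions produced by split(). */
    public static final class Split<T> {
        public final List<T> train;
        public final List<T> test;

        Split(List<T> train, List<T> test) {
            this.train = train;
            this.test = test;
        }
    }

    /**
     * Shuffles a copy of the data with a fixed seed (so the split is reproducible)
     * and places the first testFraction of the shuffled items in the test set.
     */
    public static <T> Split<T> split(List<T> data, double testFraction, long seed) {
        if (testFraction < 0.0 || testFraction > 1.0) {
            throw new IllegalArgumentException("testFraction must be in [0, 1]");
        }
        List<T> shuffled = new ArrayList<>(data);
        Collections.shuffle(shuffled, new Random(seed));
        int testSize = (int) Math.round(shuffled.size() * testFraction);
        List<T> test = new ArrayList<>(shuffled.subList(0, testSize));
        List<T> train = new ArrayList<>(shuffled.subList(testSize, shuffled.size()));
        return new Split<>(train, test);
    }

    public static void main(String[] args) {
        List<Integer> samples = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            samples.add(i);
        }
        Split<Integer> s = split(samples, 0.2, 42L);
        System.out.println("train=" + s.train.size() + " test=" + s.test.size()); // train=8 test=2
    }
}
```

With ML Kit's base APIs this step and the training that follows it are already done by Google, which is what removes the first stage from Figure 2.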

ML Kit simplifies machine learning. With this AI tool, only two stages are needed:

Selection of Model - This is done by the business analyst or product owner, who chooses the base API that fits the requirement, such as face detection, text recognition or another application area.

Prediction - After the API is selected, the software developer runs predictions directly and analyses various performance metrics to choose the most accurate option.

Figure 2: Machine Learning using ML Kit

What are the major application areas of ML Kit?

Some base APIs let you apply machine learning directly, without any training. They fall into two groups:

Vision APIs - These video and image analysis APIs label images and detect barcodes, text, faces and objects. Six types are available: Barcode Scanning, Face Detection, Text Recognition, Image Labeling, Landmark Recognition and Digital Ink Recognition.

Natural Language Processing APIs - These APIs identify and translate between 58 languages and provide reply suggestions. Three NLP APIs are available: Smart Reply, Language Translation and Language Identification.

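Some of these features can be used both on-device and in the cloud, while others (such as face detection, barcode scanning and the NLP APIs described below) run on-device only.

• On-device: fast, works without an internet connection and is free of cost.

• Cloud: more accurate, using the power of machine learning on Google Cloud Platform, with a free quota of 1,000 requests per feature per month.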
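For features offered in both variants, an app has to decide which one to call. The following is a hypothetical sketch and not an ML Kit API. It assumes that the app tracks its own count of cloud calls per feature for the current month, and that it falls back to the free on-device model when it is offline or has used up the 1,000-request free quota. `BackendChooser` and its parameters are illustrative names.

```java
/**
 * Hypothetical helper (not part of ML Kit): picks the on-device or cloud variant
 * of a feature that supports both. Assumes the app counts its own cloud calls.
 */
public class BackendChooser {

    public enum Backend { ON_DEVICE, CLOUD }

    /** Free cloud quota per feature per month, as quoted above. */
    public static final int FREE_CLOUD_QUOTA = 1_000;

    public static Backend choose(boolean online, int cloudCallsThisMonth) {
        // The on-device model works offline and is free, so it is the fallback.
        if (!online) {
            return Backend.ON_DEVICE;
        }
        // Use the more accurate cloud model only while the free quota lasts.
        return cloudCallsThisMonth < FREE_CLOUD_QUOTA ? Backend.CLOUD : Backend.ON_DEVICE;
    }

    public static void main(String[] args) {
        System.out.println(choose(true, 10));    // CLOUD
        System.out.println(choose(false, 10));   // ON_DEVICE
        System.out.println(choose(true, 1_000)); // ON_DEVICE
    }
}
```

Whether to fall back or to accept paid usage beyond the free tier is a product decision. The cutoff in this sketch only mirrors the quota quoted above.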

Prerequisites for an ML project in Android

• For cloud-based features: the project needs the Blaze plan, which can be selected in the Firebase console, because the cloud features use the Cloud Vision API behind the scenes. The Cloud Vision API must also be enabled for the project first: https://console.cloud.google.com/apis/library/vision.googleapis.com/.

Add Firebase to the Android project from the Tools menu in Android Studio, or from the Firebase console after logging in.

In your project-level build.gradle file, include Google's Maven repository in both the buildscript and allprojects sections.

Add the dependencies for the ML Kit Android libraries to your module (app-level) Gradle file (usually app/build.gradle).

Sync the project with the Gradle files.

Project Implementation

Face Detection - Face detection identifies people's faces in an image on-device, including multiple faces in one image. It can report the coordinates of the ears, eyes, cheeks, mouth and nose, and it can classify whether a face is smiling and whether each eye is open or closed. Because this runs in real time, it can be used to recognize expressions in images or video. Before detecting faces, the detector's default settings (performance mode, landmark mode and classification mode) can be changed through FirebaseVisionFaceDetectorOptions, as done in detectFaces() in FaceActivity.java below.
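The smiling and eyes-open results are probabilities between 0 and 1, so the app has to pick a threshold before it can show a yes/no answer. The sketch below isolates that logic in plain Java. It assumes a cutoff of 0.5 (the value used in getInfoFromFaces() in FaceActivity.java) and assumes that the detector reports -1 for a probability it did not compute, mirroring `FirebaseVisionFace.UNCOMPUTED_PROBABILITY`. Unlike a plain two-way mapping, it reports "Unknown" instead of silently treating an uncomputed value as "No".

```java
/**
 * Illustrative sketch: turns per-face classification probabilities into labels.
 * UNCOMPUTED is assumed to mirror FirebaseVisionFace.UNCOMPUTED_PROBABILITY (-1).
 */
public class FaceSummary {

    static final float UNCOMPUTED = -1.0f;
    static final float THRESHOLD = 0.5f;

    /** Maps one probability to yes/no, or "Unknown" if it was not computed. */
    static String label(float probability, String yes, String no) {
        if (probability == UNCOMPUTED) {
            return "Unknown"; // classification disabled or not possible for this face
        }
        return probability > THRESHOLD ? yes : no;
    }

    public static String summarize(float smile, float leftEyeOpen, float rightEyeOpen) {
        return "Smile: " + label(smile, "Yes", "No")
                + "\nLeft eye: " + label(leftEyeOpen, "Open", "Closed")
                + "\nRight eye: " + label(rightEyeOpen, "Open", "Closed");
    }

    public static void main(String[] args) {
        // Prints three lines: Smile: Yes / Left eye: Open / Right eye: Unknown
        System.out.println(summarize(0.92f, 0.8f, UNCOMPUTED));
    }
}
```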

The XML layout for the cloud option, activity_cloud.xml (it has both an on-device and a cloud button), is as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:padding="@dimen/margin_default"
    tools:context=".TextActivity">

    <!-- Scrollable area above the button bar: the selected image and the result text -->
    <ScrollView
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:layout_above="@+id/section_bottom">

        <LinearLayout
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:orientation="vertical"
            tools:ignore="UseCompoundDrawables">

            <ImageView
                android:id="@+id/image_view"
                android:layout_width="match_parent"
                android:layout_height="wrap_content"
                android:adjustViewBounds="true"
                android:contentDescription="@string/app_name"
                android:src="@drawable/no_image" />

            <TextView
                android:id="@+id/text_view"
                android:layout_width="match_parent"
                android:layout_height="wrap_content"
                android:layout_marginTop="@dimen/margin_default"
                android:textAppearance="@style/TextAppearance.AppCompat.Medium"
                android:textStyle="bold" />

        </LinearLayout>

    </ScrollView>

    <!-- Bottom bar with the on-device and cloud buttons -->
    <LinearLayout
        android:id="@+id/section_bottom"
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_alignParentBottom="true"
        android:orientation="horizontal">

        <!-- CustomButton must supply layout_width and layout_height, which are required -->
        <Button
            android:id="@+id/btn_device"
            style="@style/CustomButton"
            android:text="@string/btn_device" />

        <Button
            android:id="@+id/btn_cloud"
            style="@style/CustomButton"
            android:text="@string/btn_cloud" />

    </LinearLayout>

</RelativeLayout>
```

The XML layout for the on-device option, activity_device.xml, is as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<ScrollView xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:padding="@dimen/margin_default">

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:orientation="vertical"
        tools:ignore="UseCompoundDrawables">

        <ImageView
            android:id="@+id/image_view"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:adjustViewBounds="true"
            android:contentDescription="@string/app_name"
            android:src="@drawable/no_image" />

        <TextView
            android:id="@+id/text_view"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:layout_marginTop="@dimen/margin_default"
            android:textAppearance="@style/TextAppearance.AppCompat.Medium"
            android:textStyle="bold" />

    </LinearLayout>

</ScrollView>
```

The Java file for the whole application, MainActivity.java, is as follows:

```java
package com.example.mlkit;

import android.content.Intent;
import android.os.Bundle;
import android.view.View;
import android.widget.AdapterView;
import android.widget.ArrayAdapter;
import android.widget.ListView;

import androidx.appcompat.app.AppCompatActivity;

// Home screen: lists the available ML Kit demos and opens the one the user taps.
public class MainActivity extends AppCompatActivity implements AdapterView.OnItemClickListener {

    private String[] classNames;

    // The order must match the R.array.class_name string array, because the tapped
    // list position is used as an index into both. Only some of these activities
    // are shown in this article.
    private static final Class<?>[] CLASSES = new Class<?>[]{
            TextActivity.class,
            BarcodeActivity.class,
            FaceActivity.class,
            ImageActivity.class,
            LandmarkActivity.class,
            CustomActivity.class,
            LanguageActivity.class,
            SmartReplyActivity.class,
            TranslateActivity.class,
            AutoMLActivity.class
    };

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        classNames = getResources().getStringArray(R.array.class_name);
        ListView listView = findViewById(R.id.list_view);
        ArrayAdapter<String> adapter = new ArrayAdapter<>(this, android.R.layout.simple_list_item_1, classNames);
        listView.setAdapter(adapter);
        listView.setOnItemClickListener(this);
    }

    @Override
    public void onItemClick(AdapterView<?> adapterView, View view, int position, long id) {
        Intent intent = new Intent(this, CLASSES[position]);
        // Passed to the next screen; BaseActivity (not shown) presumably uses it as the action bar title.
        intent.putExtra(BaseActivity.ACTION_BAR_TITLE, classNames[position]);
        startActivity(intent);
    }
}
```

The corresponding activity_main.xml file is as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    tools:context=".MainActivity">

    <ListView
        android:id="@+id/list_view"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:scrollbars="none" />

</LinearLayout>
```

The Java file FaceActivity.java is as follows:

```java
package com.example.mlkit;

import android.content.Intent;
import android.graphics.Bitmap;
import android.net.Uri;
import android.os.Bundle;
import android.widget.ImageView;
import android.widget.TextView;

import androidx.annotation.NonNull;

import com.example.mlkit.helpers.MyHelper;
import com.google.android.gms.tasks.OnFailureListener;
import com.google.android.gms.tasks.OnSuccessListener;
import com.google.firebase.ml.vision.FirebaseVision;
import com.google.firebase.ml.vision.common.FirebaseVisionImage;
import com.google.firebase.ml.vision.face.FirebaseVisionFace;
import com.google.firebase.ml.vision.face.FirebaseVisionFaceDetector;
import com.google.firebase.ml.vision.face.FirebaseVisionFaceDetectorOptions;

import java.util.List;

// BaseActivity (not shown in this article) provides imageFile, the RC_* request codes
// and checkStoragePermission().
public class FaceActivity extends BaseActivity {

    private ImageView mImageView;
    private TextView mTextView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_device);
        mImageView = findViewById(R.id.image_view);
        mTextView = findViewById(R.id.text_view);
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        Bitmap bitmap;
        if (resultCode == RESULT_OK) {
            switch (requestCode) {
                case RC_STORAGE_PERMS1:
                case RC_STORAGE_PERMS2:
                    checkStoragePermission(requestCode);
                    break;
                case RC_SELECT_PICTURE:
                    // Picked from the gallery: decode from a file path if one is available,
                    // otherwise directly from the content Uri.
                    Uri dataUri = data.getData();
                    String path = MyHelper.getPath(this, dataUri);
                    if (path == null) {
                        bitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);
                    } else {
                        bitmap = MyHelper.resizeImage(imageFile, path, mImageView);
                    }
                    if (bitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(bitmap);
                        detectFaces(bitmap);
                    }
                    break;
                case RC_TAKE_PICTURE:
                    // Taken with the camera and saved to imageFile.
                    bitmap = MyHelper.resizeImage(imageFile, imageFile.getPath(), mImageView);
                    if (bitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(bitmap);
                        detectFaces(bitmap);
                    }
                    break;
            }
        }
    }

    private void detectFaces(Bitmap bitmap) {
        // ACCURATE favours accuracy over speed; ALL_LANDMARKS and ALL_CLASSIFICATIONS enable
        // landmark positions and the smiling / eyes-open probabilities.
        FirebaseVisionFaceDetectorOptions options = new FirebaseVisionFaceDetectorOptions.Builder()
                .setPerformanceMode(FirebaseVisionFaceDetectorOptions.ACCURATE)
                .setLandmarkMode(FirebaseVisionFaceDetectorOptions.ALL_LANDMARKS)
                .setClassificationMode(FirebaseVisionFaceDetectorOptions.ALL_CLASSIFICATIONS)
                .build();

        FirebaseVisionFaceDetector detector = FirebaseVision.getInstance().getVisionFaceDetector(options);
        FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(bitmap);

        detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionFace>>() {
            @Override
            public void onSuccess(List<FirebaseVisionFace> faces) {
                mTextView.setText(getInfoFromFaces(faces));
            }
        }).addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                mTextView.setText(R.string.error_detect);
            }
        });
    }

    private String getInfoFromFaces(List<FirebaseVisionFace> faces) {
        StringBuilder result = new StringBuilder();
        for (FirebaseVisionFace face : faces) {
            // Reset for every face so one face's values never carry over to the next.
            float smileProb = 0;
            float leftEyeOpenProb = 0;
            float rightEyeOpenProb = 0;

            // Landmarks (mouth, ears, eyes, cheeks, nose) are available through
            // face.getLandmark(...) because ALL_LANDMARKS is enabled.

            // The probabilities below are only computed because ALL_CLASSIFICATIONS is enabled.
            if (face.getSmilingProbability() != FirebaseVisionFace.UNCOMPUTED_PROBABILITY) {
                smileProb = face.getSmilingProbability();
            }
            if (face.getLeftEyeOpenProbability() != FirebaseVisionFace.UNCOMPUTED_PROBABILITY) {
                leftEyeOpenProb = face.getLeftEyeOpenProbability();
            }
            if (face.getRightEyeOpenProbability() != FirebaseVisionFace.UNCOMPUTED_PROBABILITY) {
                rightEyeOpenProb = face.getRightEyeOpenProbability();
            }

            result.append("Smile: ").append(smileProb > 0.5 ? "Yes" : "No");
            result.append("\nLeft eye: ").append(leftEyeOpenProb > 0.5 ? "Open" : "Closed");
            result.append("\nRight eye: ").append(rightEyeOpenProb > 0.5 ? "Open" : "Closed");
            result.append("\n\n");
        }
        return result.toString();
    }
}
```

Text Recognition - This feature extracts text from an image. It makes tasks such as filling in ID card details or checking lottery numbers more convenient, and it can also automate data-entry tasks such as processing credit cards, receipts and business cards. It is available both on-device and in the cloud. The difference is that the on-device model only recognizes Latin-script characters (for example, English), while the cloud model can recognize many more languages, including non-Latin scripts and special characters.
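Because the on-device recognizer only reads Latin-script characters, an app that knows what kind of text it expects (for example, a name or language the user has entered) could decide up front whether the cloud recognizer is needed. The following is a hypothetical helper and not part of ML Kit. It uses the JDK's `Character.UnicodeScript` to check that every letter is Latin, and it ignores digits, punctuation and combining marks.

```java
/**
 * Hypothetical routing helper (not part of ML Kit): the on-device recognizer reads
 * Latin script only, so text in other scripts (e.g. Devanagari) needs the cloud one.
 */
public class ScriptCheck {

    /** Returns true if every letter in text belongs to the Latin script. */
    public static boolean isLatinOnly(String text) {
        return text.codePoints()
                .filter(Character::isLetter) // skip digits, spaces, punctuation, marks
                .allMatch(cp -> Character.UnicodeScript.of(cp) == Character.UnicodeScript.LATIN);
    }

    /** Suggests which recognizer to use for the expected text. */
    public static String suggestRecognizer(String expectedText) {
        return isLatinOnly(expectedText) ? "on-device" : "cloud";
    }

    public static void main(String[] args) {
        System.out.println(suggestRecognizer("Invoice No. 42"));                     // on-device
        System.out.println(suggestRecognizer("\u0928\u092E\u0938\u094D\u0924\u0947")); // cloud (Hindi)
    }
}
```

The language hints passed to the cloud recognizer in TextActivity.java ("en" and "hi") follow the same idea: Hindi text in Devanagari is outside what the on-device model can read.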

Create a helpers package under the java folder and add MyHelper.java to it. The other activities depend on this class:

```java
package com.example.mlkit.helpers;

import android.app.Activity;
import android.app.Dialog;
import android.content.Context;
import android.content.DialogInterface;
import android.content.Intent;
import android.database.Cursor;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.Matrix;
import android.media.ExifInterface;
import android.net.Uri;
import android.os.Environment;
import android.provider.MediaStore;
import android.provider.Settings;
import android.widget.ImageView;
import android.widget.LinearLayout;
import android.widget.ProgressBar;

import androidx.appcompat.app.AlertDialog;

import com.example.mlkit.R;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import static android.graphics.BitmapFactory.decodeFile;
import static android.graphics.BitmapFactory.decodeStream;

public class MyHelper {

    private static Dialog mDialog;

    // Resolves a content Uri to a file path through the MediaStore DATA column.
    // Returns null when no path is available, so callers fall back to decoding the Uri stream.
    public static String getPath(Context context, Uri uri) {
        String path = null;
        String[] projection = {MediaStore.Images.Media.DATA};
        Cursor cursor = context.getContentResolver().query(uri, projection, null, null, null);
        if (cursor != null) {
            try {
                int columnIndex = cursor.getColumnIndex(MediaStore.Images.Media.DATA);
                if (columnIndex != -1 && cursor.moveToFirst()) {
                    path = cursor.getString(columnIndex);
                }
            } finally {
                cursor.close();
            }
        }
        return path;
    }

    // Returns a file in an app folder on external storage (created if needed).
    // Note: getExternalStorageDirectory() is deprecated from API 29.
    public static File createTempFile(File file) {
        File dir = new File(Environment.getExternalStorageDirectory().getPath() + "/com.example.mlkit");
        if (!dir.exists() || !dir.isDirectory()) {
            //noinspection ResultOfMethodCallIgnored
            dir.mkdirs();
        }
        if (file == null) {
            file = new File(dir, "original.jpg");
        }
        return file;
    }

    // Shows a non-cancelable progress dialog while a cloud request is running.
    public static void showDialog(Context context) {
        mDialog = new Dialog(context, R.style.NewDialog);
        mDialog.addContentView(
                new ProgressBar(context),
                new LinearLayout.LayoutParams(LinearLayout.LayoutParams.WRAP_CONTENT, LinearLayout.LayoutParams.WRAP_CONTENT)
        );
        mDialog.setCancelable(false);
        if (!mDialog.isShowing()) {
            mDialog.show();
        }
    }

    public static void dismissDialog() {
        if (mDialog != null && mDialog.isShowing()) {
            mDialog.dismiss();
        }
    }

    // Explains why a permission is needed and offers to open the app's settings screen.
    public static void needPermission(final Activity activity, final int requestCode, int msg) {
        AlertDialog.Builder alert = new AlertDialog.Builder(activity);
        alert.setMessage(msg);
        alert.setPositiveButton(android.R.string.ok, new DialogInterface.OnClickListener() {
            @Override
            public void onClick(DialogInterface dialogInterface, int i) {
                dialogInterface.dismiss();
                Intent intent = new Intent(Settings.ACTION_APPLICATION_DETAILS_SETTINGS);
                intent.setData(Uri.parse("package:" + activity.getPackageName()));
                activity.startActivityForResult(intent, requestCode);
            }
        });
        alert.setNegativeButton(android.R.string.cancel, new DialogInterface.OnClickListener() {
            @Override
            public void onClick(DialogInterface dialogInterface, int i) {
                dialogInterface.dismiss();
            }
        });
        alert.setCancelable(false);
        alert.show();
    }

    // Decodes a downsampled bitmap from a content Uri and saves a JPEG copy to imageFile.
    public static Bitmap resizeImage(File imageFile, Context context, Uri uri, ImageView view) {
        BitmapFactory.Options options = new BitmapFactory.Options();
        try {
            // First pass: read only the image dimensions, without allocating pixels.
            options.inJustDecodeBounds = true;
            try (InputStream in = context.getContentResolver().openInputStream(uri)) {
                decodeStream(in, null, options);
            }
            // Second pass: decode a bitmap scaled down to roughly the ImageView size.
            options.inJustDecodeBounds = false;
            options.inSampleSize = calculateInSampleSize(options.outWidth, options.outHeight, view);
            Bitmap bitmap;
            try (InputStream in = context.getContentResolver().openInputStream(uri)) {
                bitmap = decodeStream(in, null, options);
            }
            return bitmap == null ? null : compressImage(imageFile, bitmap);
        } catch (IOException e) {
            e.printStackTrace();
            return null;
        }
    }

    // Same as above for a file path; also applies the EXIF orientation so photos appear upright.
    public static Bitmap resizeImage(File imageFile, String path, ImageView view) {
        try {
            ExifInterface exif = new ExifInterface(path);
            int rotation = exif.getAttributeInt(ExifInterface.TAG_ORIENTATION, ExifInterface.ORIENTATION_NORMAL);
            int rotationInDegrees = exifToDegrees(rotation);

            BitmapFactory.Options options = new BitmapFactory.Options();
            options.inJustDecodeBounds = true;
            decodeFile(path, options);
            options.inJustDecodeBounds = false;
            options.inSampleSize = calculateInSampleSize(options.outWidth, options.outHeight, view);

            Bitmap bitmap = decodeFile(path, options);
            if (bitmap == null) {
                return null;
            }
            bitmap = rotateImage(bitmap, rotationInDegrees);
            return compressImage(imageFile, bitmap);
        } catch (IOException e) {
            e.printStackTrace();
            return null;
        }
    }

    // Downsampling factor; guards against a view that has not been laid out yet (size 0)
    // and never returns less than 1.
    private static int calculateInSampleSize(int photoW, int photoH, ImageView view) {
        int targetW = Math.max(1, view.getWidth());
        int targetH = Math.max(1, view.getHeight());
        return Math.max(1, Math.min(photoW / targetW, photoH / targetH));
    }

    // Writes the bitmap to imageFile as an 80%-quality JPEG and returns the bitmap unchanged.
    private static Bitmap compressImage(File imageFile, Bitmap bmp) {
        try (FileOutputStream fos = new FileOutputStream(imageFile)) {
            bmp.compress(Bitmap.CompressFormat.JPEG, 80, fos);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return bmp;
    }

    // Rotates the bitmap by the given angle; returns it unchanged when no rotation is needed.
    private static Bitmap rotateImage(Bitmap src, float degree) {
        if (degree == 0) {
            return src;
        }
        Matrix matrix = new Matrix();
        matrix.postRotate(degree);
        return Bitmap.createBitmap(src, 0, 0, src.getWidth(), src.getHeight(), matrix, true);
    }

    private static int exifToDegrees(int exifOrientation) {
        if (exifOrientation == ExifInterface.ORIENTATION_ROTATE_90) {
            return 90;
        } else if (exifOrientation == ExifInterface.ORIENTATION_ROTATE_180) {
            return 180;
        } else if (exifOrientation == ExifInterface.ORIENTATION_ROTATE_270) {
            return 270;
        }
        return 0;
    }
}
```

The Java file TextActivity.java is given below:

```java
package com.example.mlkit;

import android.content.Intent;
import android.graphics.Bitmap;
import android.net.Uri;
import android.os.Bundle;
import android.view.View;
import android.widget.ImageView;
import android.widget.TextView;

import androidx.annotation.NonNull;

import com.example.mlkit.helpers.MyHelper;
import com.google.android.gms.tasks.OnFailureListener;
import com.google.android.gms.tasks.OnSuccessListener;
import com.google.firebase.ml.vision.FirebaseVision;
import com.google.firebase.ml.vision.cloud.FirebaseVisionCloudDetectorOptions;
import com.google.firebase.ml.vision.common.FirebaseVisionImage;
import com.google.firebase.ml.vision.text.FirebaseVisionCloudTextRecognizerOptions;
import com.google.firebase.ml.vision.text.FirebaseVisionText;
import com.google.firebase.ml.vision.text.FirebaseVisionTextRecognizer;

import java.util.Arrays;

public class TextActivity extends BaseActivity implements View.OnClickListener {

    private Bitmap mBitmap;
    private ImageView mImageView;
    private TextView mTextView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_cloud);
        mTextView = findViewById(R.id.text_view);
        mImageView = findViewById(R.id.image_view);
        findViewById(R.id.btn_device).setOnClickListener(this);
        findViewById(R.id.btn_cloud).setOnClickListener(this);
    }

    @Override
    public void onClick(View view) {
        // Recognition only starts once an image has been loaded into mBitmap.
        switch (view.getId()) {
            case R.id.btn_device:
                if (mBitmap != null) {
                    runTextRecognition();
                }
                break;
            case R.id.btn_cloud:
                if (mBitmap != null) {
                    runCloudTextRecognition();
                }
                break;
        }
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        if (resultCode == RESULT_OK) {
            switch (requestCode) {
                case RC_STORAGE_PERMS1:
                case RC_STORAGE_PERMS2:
                    checkStoragePermission(requestCode);
                    break;
                case RC_SELECT_PICTURE:
                    Uri dataUri = data.getData();
                    String path = MyHelper.getPath(this, dataUri);
                    if (path == null) {
                        mBitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);
                    } else {
                        mBitmap = MyHelper.resizeImage(imageFile, path, mImageView);
                    }
                    if (mBitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(mBitmap);
                    }
                    break;
                case RC_TAKE_PICTURE:
                    mBitmap = MyHelper.resizeImage(imageFile, imageFile.getPath(), mImageView);
                    if (mBitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(mBitmap);
                    }
                    break;
            }
        }
    }

    // On-device recognizer: free and works offline, but reads Latin script only.
    private void runTextRecognition() {
        FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);
        FirebaseVisionTextRecognizer detector = FirebaseVision.getInstance().getOnDeviceTextRecognizer();
        detector.processImage(image).addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {
            @Override
            public void onSuccess(FirebaseVisionText texts) {
                processTextRecognitionResult(texts);
            }
        }).addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                e.printStackTrace();
                mTextView.setText(R.string.error_detect);
            }
        });
    }

    // Cloud recognizer: requires the Blaze plan; language hints (English, Hindi) help
    // with non-Latin text.
    private void runCloudTextRecognition() {
        MyHelper.showDialog(this);
        FirebaseVisionCloudTextRecognizerOptions options = new FirebaseVisionCloudTextRecognizerOptions.Builder()
                .setLanguageHints(Arrays.asList("en", "hi"))
                .setModelType(FirebaseVisionCloudDetectorOptions.LATEST_MODEL)
                .build();
        FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);
        FirebaseVisionTextRecognizer detector = FirebaseVision.getInstance().getCloudTextRecognizer(options);
        detector.processImage(image).addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {
            @Override
            public void onSuccess(FirebaseVisionText texts) {
                MyHelper.dismissDialog();
                processTextRecognitionResult(texts);
            }
        }).addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                MyHelper.dismissDialog();
                e.printStackTrace();
            }
        });
    }

    private void processTextRecognitionResult(FirebaseVisionText firebaseVisionText) {
        mTextView.setText(null);
        if (firebaseVisionText.getTextBlocks().size() == 0) {
            mTextView.setText(R.string.error_not_found);
            return;
        }
        for (FirebaseVisionText.TextBlock block : firebaseVisionText.getTextBlocks()) {
            mTextView.append(block.getText());
            mTextView.append("\n");
            // In case you want to extract each line and element (word) instead:
            /*
            for (FirebaseVisionText.Line line : block.getLines()) {
                for (FirebaseVisionText.Element element : line.getElements()) {
                    mTextView.append(element.getText() + " ");
                }
            }
            */
        }
    }
}
```

Barcode Scanning - Barcode scanning reads encoded data on-device according to various barcode format standards, including QR codes, regardless of the image orientation. It supports linear formats such as Codabar, Code 39, Code 93, Code 128, EAN-8, EAN-13, ITF, UPC-A and UPC-E, and 2D formats such as Aztec, Data Matrix, PDF417 and QR Code. For 2D formats, many structured data types are supported, such as URLs, contact information, calendar events, email addresses, phone numbers, SMS message prompts and geographic locations.
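ML Kit returns the decoded content of a barcode as a raw string. For linear retail formats such as EAN-13, an app might additionally verify the check digit before, for example, looking up a product. The sketch below is a plain-Java illustration of the standard EAN-13 rule, in which weights 1 and 3 alternate over the first 12 digits. The class name `Ean13` is hypothetical, and nothing here is part of the ML Kit API.

```java
/**
 * Illustrative sketch: validates the check digit of an EAN-13 value, such as the raw
 * value of a barcode detected with format EAN-13. Not part of ML Kit.
 */
public class Ean13 {

    public static boolean isValid(String code) {
        if (code == null || !code.matches("\\d{13}")) {
            return false; // EAN-13 is exactly 13 decimal digits
        }
        int sum = 0;
        for (int i = 0; i < 12; i++) {
            int digit = code.charAt(i) - '0';
            // Weights alternate 1, 3, 1, 3, ... starting from the leftmost digit.
            sum += (i % 2 == 0) ? digit : digit * 3;
        }
        int expectedCheck = (10 - sum % 10) % 10;
        return expectedCheck == code.charAt(12) - '0';
    }

    public static void main(String[] args) {
        System.out.println(isValid("4006381333931")); // true
        System.out.println(isValid("4006381333932")); // false (wrong check digit)
    }
}
```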

The Java file BarcodeActivity.java is as follows:

```java
package com.example.mlkit;

import android.content.Intent;
import android.graphics.Bitmap;
import android.net.Uri;
import android.os.Bundle;
import android.widget.ImageView;
import android.widget.TextView;

import androidx.annotation.NonNull;

import com.example.mlkit.helpers.MyHelper;
import com.google.android.gms.tasks.OnFailureListener;
import com.google.android.gms.tasks.OnSuccessListener;
import com.google.firebase.ml.vision.FirebaseVision;
import com.google.firebase.ml.vision.barcode.FirebaseVisionBarcode;
import com.google.firebase.ml.vision.barcode.FirebaseVisionBarcodeDetector;
import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import java.util.List;

public class BarcodeActivity extends BaseActivity {

    private ImageView mImageView;
    private TextView mTextView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_device);
        mImageView = findViewById(R.id.image_view);
        mTextView = findViewById(R.id.text_view);
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        Bitmap bitmap;
        if (resultCode == RESULT_OK) {
            switch (requestCode) {
                case RC_STORAGE_PERMS1:
                case RC_STORAGE_PERMS2:
                    checkStoragePermission(requestCode);
                    break;
                case RC_SELECT_PICTURE:
                    Uri dataUri = data.getData();
                    String path = MyHelper.getPath(this, dataUri);
                    if (path == null) {
                        bitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);
                    } else {
                        bitmap = MyHelper.resizeImage(imageFile, path, mImageView);
                    }
                    if (bitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(bitmap);
                        barcodeDetector(bitmap);
                    }
                    break;
                case RC_TAKE_PICTURE:
                    bitmap = MyHelper.resizeImage(imageFile, imageFile.getPath(), mImageView);
                    if (bitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(bitmap);
                        barcodeDetector(bitmap);
                    }
                    break;
            }
        }
    }

    private void barcodeDetector(Bitmap bitmap) {
        FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(bitmap);
        // All supported formats are detected by default. To detect only some formats
        // (which is faster), build options like these and pass them to
        // getVisionBarcodeDetector(options); this needs an import of FirebaseVisionBarcodeDetectorOptions.
        /*
        FirebaseVisionBarcodeDetectorOptions options = new FirebaseVisionBarcodeDetectorOptions.Builder()
                .setBarcodeFormats(FirebaseVisionBarcode.FORMAT_QR_CODE, FirebaseVisionBarcode.FORMAT_AZTEC)
                .build();
        */
        FirebaseVisionBarcodeDetector detector = FirebaseVision.getInstance().getVisionBarcodeDetector();
        detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionBarcode>>() {
            @Override
            public void onSuccess(List<FirebaseVisionBarcode> firebaseVisionBarcodes) {
                mTextView.setText(getInfoFromBarcode(firebaseVisionBarcodes));
            }
        }).addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                mTextView.setText(R.string.error_detect);
            }
        });
    }

    private String getInfoFromBarcode(List<FirebaseVisionBarcode> barcodes) {
        StringBuilder result = new StringBuilder();
        for (FirebaseVisionBarcode barcode : barcodes) {
            result.append(barcode.getRawValue()).append('\n');
            // Structured data can also be read according to the value type, for example:
            /*
            int valueType = barcode.getValueType();
            switch (valueType) {
                case FirebaseVisionBarcode.TYPE_WIFI:
                    String ssid = barcode.getWifi().getSsid();
                    String password = barcode.getWifi().getPassword();
                    int type = barcode.getWifi().getEncryptionType();
                    break;
                case FirebaseVisionBarcode.TYPE_URL:
                    String title = barcode.getUrl().getTitle();
                    String url = barcode.getUrl().getUrl();
                    break;
            }
            */
        }
        // No barcode found: show the error message instead of an empty result.
        return result.length() == 0 ? getString(R.string.error_detect) : result.toString();
    }
}
```

Image Labeling - Image labeling identifies the content of an image, such as people, animals, things, places and events. It can run on-device or in the cloud, and the difference is the number of labels: the on-device model has 400+ base labels, while the cloud model has more than 10,000, so cloud labels are more specific. The on-device model provides high-accuracy classification with low latency and needs no network connection.
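The labelers in ImageActivity.java already drop labels under the confidence threshold passed to `setConfidenceThreshold(0.7f)`. If an app also wants to rank the remaining labels and keep only the top few, the logic can be sketched in plain Java as follows. `Label` is a hypothetical stand-in for `FirebaseVisionImageLabel` (which exposes `getText()` and `getConfidence()`), and the cap of five results is an arbitrary example value.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Illustrative sketch: filters labels by confidence and ranks them, highest first. */
public class LabelFilter {

    /** Hypothetical stand-in for FirebaseVisionImageLabel. */
    public static final class Label {
        public final String text;
        public final float confidence; // 0.0 to 1.0

        public Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }

        @Override
        public String toString() {
            return text + " (" + Math.round(confidence * 100) + "%)";
        }
    }

    /** Keeps labels at or above the threshold, sorted by confidence, at most maxResults. */
    public static List<Label> top(List<Label> labels, float threshold, int maxResults) {
        List<Label> kept = new ArrayList<>();
        for (Label label : labels) {
            if (label.confidence >= threshold) {
                kept.add(label);
            }
        }
        kept.sort(Comparator.comparingDouble((Label l) -> l.confidence).reversed());
        return new ArrayList<>(kept.subList(0, Math.min(maxResults, kept.size())));
    }

    public static void main(String[] args) {
        List<Label> labels = Arrays.asList(
                new Label("Dog", 0.91f), new Label("Grass", 0.65f), new Label("Pet", 0.83f));
        System.out.println(top(labels, 0.7f, 5)); // [Dog (91%), Pet (83%)]
    }
}
```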

The Java file ImageActivity.java is shown below:

```java
package com.example.mlkit;

import android.content.Intent;
import android.graphics.Bitmap;
import android.net.Uri;
import android.os.Bundle;
import android.view.View;
import android.widget.ImageView;
import android.widget.TextView;

import androidx.annotation.NonNull;

import com.example.mlkit.helpers.MyHelper;
import com.google.android.gms.tasks.OnFailureListener;
import com.google.android.gms.tasks.OnSuccessListener;
import com.google.firebase.ml.vision.FirebaseVision;
import com.google.firebase.ml.vision.common.FirebaseVisionImage;
import com.google.firebase.ml.vision.label.FirebaseVisionCloudImageLabelerOptions;
import com.google.firebase.ml.vision.label.FirebaseVisionImageLabel;
import com.google.firebase.ml.vision.label.FirebaseVisionImageLabeler;
import com.google.firebase.ml.vision.label.FirebaseVisionOnDeviceImageLabelerOptions;

import java.util.List;

public class ImageActivity extends BaseActivity implements View.OnClickListener {

    private Bitmap mBitmap;
    private ImageView mImageView;
    private TextView mTextView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_cloud);
        mTextView = findViewById(R.id.text_view);
        mImageView = findViewById(R.id.image_view);
        findViewById(R.id.btn_device).setOnClickListener(this);
        findViewById(R.id.btn_cloud).setOnClickListener(this);
    }

    @Override
    public void onClick(View view) {
        mTextView.setText(null);
        switch (view.getId()) {
            case R.id.btn_device:
                if (mBitmap != null) {
                    // On-device labeler: only labels with confidence >= 0.7 are returned.
                    FirebaseVisionOnDeviceImageLabelerOptions options = new FirebaseVisionOnDeviceImageLabelerOptions.Builder()
                            .setConfidenceThreshold(0.7f)
                            .build();
                    FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);
                    FirebaseVisionImageLabeler detector = FirebaseVision.getInstance().getOnDeviceImageLabeler(options);
                    detector.processImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionImageLabel>>() {
                        @Override
                        public void onSuccess(List<FirebaseVisionImageLabel> labels) {
                            extractLabel(labels);
                        }
                    }).addOnFailureListener(new OnFailureListener() {
                        @Override
                        public void onFailure(@NonNull Exception e) {
                            mTextView.setText(e.getMessage());
                        }
                    });
                }
                break;
            case R.id.btn_cloud:
                if (mBitmap != null) {
                    // Cloud labeler: larger label set; needs network access and the Blaze plan.
                    MyHelper.showDialog(this);
                    FirebaseVisionCloudImageLabelerOptions options = new FirebaseVisionCloudImageLabelerOptions.Builder()
                            .setConfidenceThreshold(0.7f)
                            .build();
                    FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(mBitmap);
                    FirebaseVisionImageLabeler detector = FirebaseVision.getInstance().getCloudImageLabeler(options);
                    detector.processImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionImageLabel>>() {
                        @Override
                        public void onSuccess(List<FirebaseVisionImageLabel> labels) {
                            MyHelper.dismissDialog();
                            extractLabel(labels);
                        }
                    }).addOnFailureListener(new OnFailureListener() {
                        @Override
                        public void onFailure(@NonNull Exception e) {
                            MyHelper.dismissDialog();
                            mTextView.setText(e.getMessage());
                        }
                    });
                }
                break;
        }
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        if (resultCode == RESULT_OK) {
            switch (requestCode) {
                case RC_STORAGE_PERMS1:
                case RC_STORAGE_PERMS2:
                    checkStoragePermission(requestCode);
                    break;
                case RC_SELECT_PICTURE:
                    Uri dataUri = data.getData();
                    String path = MyHelper.getPath(this, dataUri);
                    if (path == null) {
                        mBitmap = MyHelper.resizeImage(imageFile, this, dataUri, mImageView);
                    } else {
                        mBitmap = MyHelper.resizeImage(imageFile, path, mImageView);
                    }
                    if (mBitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(mBitmap);
                    }
                    break;
                case RC_TAKE_PICTURE:
                    mBitmap = MyHelper.resizeImage(imageFile, imageFile.getPath(), mImageView);
                    if (mBitmap != null) {
                        mTextView.setText(null);
                        mImageView.setImageBitmap(mBitmap);
                    }
                    break;
            }
        }
    }

    // Shows each label followed by its confidence score (between 0 and 1).
    private void extractLabel(List<FirebaseVisionImageLabel> labels) {
        for (FirebaseVisionImageLabel label : labels) {
            mTextView.append(label.getText() + "\n");
            mTextView.append(label.getConfidence() + "\n\n");
        }
    }
}
```

Language Translation - With ML Kit's on-device Translation API, you can dynamically translate text between more than 50 languages. It is powered by the same models used by the Google Translate app's offline mode. On-device storage requirements stay low because language packs are downloaded and managed dynamically. Translations are performed quickly and don't require sending users' text to a remote server.

Smart Reply - The Smart Reply model generates reply suggestions based on the full context of a conversation, not just a single message. The on-device model generates replies quickly and doesn't require sending users' messages to a remote server. Smart Reply is intended for casual conversations in consumer apps; its suggestions might not be appropriate for other contexts or audiences. Currently, only English is supported: the model automatically identifies the language being used and only provides suggestions when it is English.

Brought to you by:

CoE-AI (CET-BBSR) - An initiative by CET-BBSR, Tech Mahindra and BPUT to provide solutions to real-world problems through ML and IoT

Researched by:

Susantini Behera

(Research Intern)
