Resume/ CV Parser (Summarization Using NLP)

- Khirod Behera

- May 22, 2020

- 2 min read

Parsing resume and to extract data from the resume is really a tough work for the recruiter or whoever want to extract some useful information from the text document here in this blog, Basically, we are going to more focus on the summarization of resume.

Resumes are a great example of unstructured data and it has its own style and format to preprocess data it will take a really good amount of time its obvious . and also this is really tough task to extract information from this type of document for a human.

so now our task is to build a model that will extract useful information automatically.

Automate resume parsing using Spacy and NLTK

Spacy is an opensource powerful python package in NLP for doing various NLP tasks. This library is published by Matthew Honnibal and Ines Monstani under MIT license.

So, what are the features of it?

Spacy is the one-stop for usual task in NLP project.

The features are: -

Tokenization

Lemmatization

Part-of-speech tagging

Entity recognition

Dependency parsing

Sentence recognition

Word-to-vector transformations

Many conventional methods for cleaning and normalizing text

Here i am going to use a pre-trained model en_core_web_sm

Before going to apply the model we need to preprocess the text.

Step-1:- Extract the document and the text.

In this step, we will understand the data because that resumes would not be in the same format it could be in pdf or Docx. and this can be the challenge to extract the texts from different resumes.

after extracting the documents we need to parse means to extract the texts from the document for that we are using pdfminer and doc2text library.

Step-2: -Apply the model.

After extracting the texts from documents we are going to apply the model

en_core_web_sm: -

This is the spacy's pre-trained model used for tokenization and for entity recognition.

Matcher:-

This is a spacy's model for matching with rule-based.

Step-3: -Apply the rule for matching string.

After applying the model now the task to find the required texts from the texts.

for that, we will apply some rules for different tasks.

Name: - As we all know the name is always a proper noun. so based on that we can build a rule so that it will find all the proper nouns from our dataset.

Phone number: -for the phone number as we know for Indian phone number all are in 10 digits numbers so we can build rule so that it will extract the phone number.

Email-id: -For Email-id we know every email id has the @ symbol in the middle of the text (i.e. " "+@" "+." ") we can add rules such as this.

Skills: - Till now it was an easy task to get the data because it has some exact matching rules but for skills, there are no rules so this is the really tough task because everyone has the different skillsets. so for this problem 1st, we will create a data set and we will start matching with the document, and with the regular expression we will find the result.

Education: -For education also we will apply the same because as above here is also no rules for education for this also we will create a dataset and will match with the text to get the education details of the candidates.

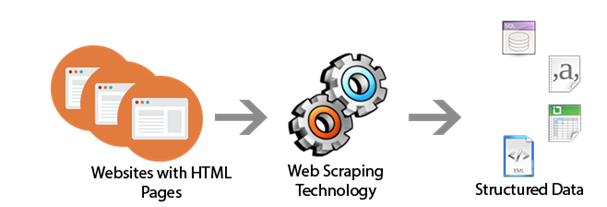

and in the final step, we can save it in a CSV or a database as our requirement.

.png)

Comments