Why care about structuring a deep learning project?

- Sushree Barsa Pattnayak

- Jun 2, 2020


Keywords- ML/DL strategy, structuring machine learning projects, iterative process, reproducibility crisis, versioning data and codebase separately, orthogonalization, single number evaluation metric

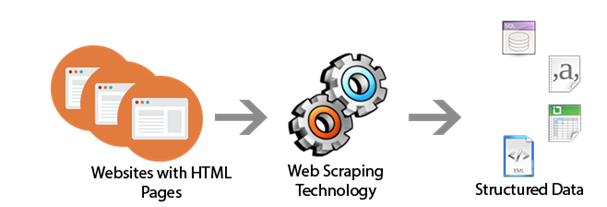

This blog post describes how to structure deep learning and machine learning projects. It covers the hidden technical debt in ML systems, the iterative nature of applied deep learning, various ways to improve the performance of DL projects, the reproducibility crisis, versioning data and codebase separately, why machine learning strategy is important, orthogonalization, the chain of assumptions in ML, and single number evaluation metrics.

To build a complete ML/DL solution, a developer should know a systematic process for finding the specific changes that actually improve accuracy. That process draws on several approaches, described below.

Fig-1 (Hidden technical debt in ML)

Good structure is probably central to all software development projects around the globe. When you develop software at a production level, you will most likely work in a team whose members have varied responsibilities: some will be back-end developers, some will be responsible for documentation, and some will be responsible for unit testing. Things can get even harder if you are working alone, for example as a contractor hired to develop a proof of concept (PoC), where you and only you are responsible for every component of the PoC. The same applies to deep learning projects: at the end of the day, we not only want to push the field forward but also want to use deep learning to build applications. And an application built with deep learning at a decent scale is, in the end, a software project.

Applied deep learning is an iterative process-

Fig-2 Applied deep learning is an iterative process

Source: Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

The performance of a deep learning project can be improved in various ways:

For example, you can collect more data if there is a dearth of it, train a network for a longer period of time, or tune the hyperparameters of your deep learning model. But when it comes to designing an entire system using deep learning, it is not uncommon for these ideas to fail. Even after you improve the quality of the training data, your model might not work as expected: maybe the new training data does not represent the edge cases well, or maybe there is still a significant amount of label noise in it; there can be many reasons. Failure in itself is fine, but when it happens, the time spent collecting, labeling and cleaning the additional data is wasted. We want to reduce this waste as much as possible, and a set of well-formed strategies helps here.

Reproducibility crisis-

This is very central to deep learning because training a neural network is stochastic: weights are randomly initialized, the training data is shuffled, and techniques such as dropout inject noise. This stochasticity is often credited as one of the reasons neural networks are such good function approximators, but it also means that running the same experiment twice can give different results. For example, today you may get an accuracy of 90% on the Cats vs. Dogs dataset with a given network architecture, and the next day you may observe a ±1% change in accuracy with the same architecture and experimentation pipeline. This is undesirable in production systems: we want consistent results and experiments that are as reproducible as possible.
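A minimal sketch of seed fixing, using only Python's standard `random` module on a toy problem (fitting y = 2x + 1 with stochastic gradient descent). The names `make_rng` and `train_toy_model` are hypothetical helpers for illustration. In a real project you would also seed NumPy and your framework (for example `np.random.seed`, `torch.manual_seed` or `tf.random.set_seed`), and seeding alone does not guarantee identical results across different machines or library versions.

```python
import random


def make_rng(seed: int) -> random.Random:
    """Create a dedicated, seeded random number generator.

    In a real project, also seed NumPy and your framework here
    (e.g. np.random.seed, torch.manual_seed or tf.random.set_seed).
    """
    return random.Random(seed)


def train_toy_model(seed: int, epochs: int = 20, lr: float = 0.05) -> tuple[float, float]:
    """Fit y = 2x + 1 with SGD; initialization and shuffling are the only randomness."""
    rng = make_rng(seed)
    data = [(x / 10, 2 * (x / 10) + 1) for x in range(20)]
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random weight initialization
    for _ in range(epochs):
        rng.shuffle(data)  # random example order each epoch (batch size 1)
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x  # gradient of 0.5 * err**2 with respect to w
            b -= lr * err      # gradient of 0.5 * err**2 with respect to b
    return w, b


run_a = train_toy_model(seed=42)
run_b = train_toy_model(seed=42)
run_c = train_toy_model(seed=7)
print(run_a == run_b)  # True: same seed, bit-identical weights
print(run_a, run_c)    # different seeds generally give different weights
```

Using a dedicated `random.Random` instance rather than the global generator keeps other code from silently consuming random numbers and breaking reproducibility.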

Think of another scenario: you have shared your experiment results with your team, but when the other members try to reproduce your experiments for further development in the project, they are unable to do so. Worse still, even fixing the seeds of the random number generators does not always guarantee reproducibility. Results can vary due to dependency versions, environment settings, hardware configurations and so on. For example, you might have run all your experiments using TensorFlow 1.13 while the other members of the team are using a newer release (2.0.0-beta). This mismatch can cause many unnecessary problems when running the experiments; in the worst case, the entire codebase can break because of it. As another example, say you ran all your experiments in a GPU-enabled environment without using any threading mechanism to load the data. That environment will not always be available: your teammates may not have GPU-enabled systems, and as a result they may get different results. So, apart from fixing the random seeds, it is important to maintain a fixed and consistent development environment across the team.
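One lightweight way to catch dependency mismatches early is to save an environment manifest alongside each experiment and compare it with a teammate's. The sketch below assumes Python 3.9+ (for `importlib.metadata` and built-in generic annotations); `capture_environment` and `diff_environments` are hypothetical helpers, and the version strings in the usage example are illustrative. In practice, `pip freeze`, `conda env export` or a shared Docker image pin the environment more completely.

```python
import json
import platform
from importlib import metadata


def capture_environment(packages: list[str]) -> dict:
    """Record the interpreter, OS and installed versions of the given packages."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed in this environment
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
    }


def diff_environments(mine: dict, theirs: dict) -> dict:
    """Return every field or package whose value differs between two manifests."""
    diffs = {}
    for key in ("python", "platform"):
        if mine[key] != theirs[key]:
            diffs[key] = (mine[key], theirs[key])
    for pkg in sorted(set(mine["packages"]) | set(theirs["packages"])):
        a, b = mine["packages"].get(pkg), theirs["packages"].get(pkg)
        if a != b:
            diffs[pkg] = (a, b)
    return diffs


# Print (or save next to the results) the manifest for the current environment.
print(json.dumps(capture_environment(["numpy", "tensorflow"]), indent=2))

# Illustrative manifests for two teammates (hypothetical values).
mine = {"python": "3.7.6", "platform": "Linux", "packages": {"tensorflow": "1.13.1"}}
theirs = {"python": "3.7.6", "platform": "Linux", "packages": {"tensorflow": "2.0.0b1"}}
print(diff_environments(mine, theirs))  # {'tensorflow': ('1.13.1', '2.0.0b1')}
```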

Versioning data and codebase separately -

Software codebases are large and keep changing. The software project you are working on will very likely have multiple releases, as most software does. Naturally, the codebase differs from one release to the next, which creates the need for version control. Versioning codebases is probably far more important than one might imagine: if the development team does not maintain these versions effectively, it becomes very hard to merge changes into the current version of the software or to roll back to a previously working release.

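When it comes to deep learning, we not only have different versions of the codebase to track but may also have different versions of the training data. You might have started your experiments with an initial set of training images and, as the project progressed, you and your team decided to add more images to the training set. This is an example where data versioning is required. Because datasets are usually much larger than code and change on their own schedule, they are typically versioned separately from the codebase, so that each experiment can record exactly which version of the code and which version of the data produced it.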
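A minimal sketch of the idea, assuming the dataset is a directory of files: compute a content hash of the data and record it next to the code version for every experiment. `dataset_version` is a hypothetical helper and the `code_version` value is a placeholder for a real commit hash; dedicated tools such as DVC implement data versioning far more completely.

```python
import hashlib
import tempfile
from pathlib import Path


def dataset_version(root: Path) -> str:
    """Return a short content hash that changes whenever any file under root changes."""
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        rel = path.relative_to(root).as_posix().encode()
        data = path.read_bytes()
        # Length-prefix each field so the byte stream fed to the hash is unambiguous.
        for field in (rel, data):
            digest.update(len(field).to_bytes(8, "big"))
            digest.update(field)
    return digest.hexdigest()[:12]


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "cat_001.jpg").write_bytes(b"fake image bytes 1")
    (root / "dog_001.jpg").write_bytes(b"fake image bytes 2")
    v1 = dataset_version(root)
    # Later in the project, the team adds more images to the training set.
    (root / "cat_002.jpg").write_bytes(b"fake image bytes 3")
    v2 = dataset_version(root)

# Record both versions with every experiment ("abc1234" is a hypothetical commit hash).
experiment = {"code_version": "abc1234", "data_version": v2}
print(v1 != v2)  # True: adding images produced a new data version
```

Hashing the relative paths as well as the contents means that renaming or moving a file also yields a new data version.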

What is a machine learning strategy? -

For example, let’s say you are working on your cat classifier. After working on it for some time, your system reaches 90% accuracy, but this isn’t good enough for your application. You might then consider ideas such as the following to improve accuracy:

- Collect more data.
- Collect a more diverse training set.
- Train the algorithm longer with gradient descent.
- Try Adam instead of gradient descent.
- Try a bigger network.
- Try a smaller network.
- Try dropout.
- Add L2 regularization.
- Change the network architecture (activation functions, number of hidden units, etc.).

If you choose poorly among these ideas, you may spend a lot of time on a method only to realize that it did not help at all. This is why a machine learning strategy is important: it helps you identify which of these ideas is most likely to improve your algorithm, so you can focus your effort there.

Orthogonalization-

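There are many things you could tune, including many hyperparameters. Orthogonalization means being clear-eyed about what to tune in order to achieve one specific effect: ideally, each knob changes one aspect of the system's performance without affecting the others, so that problems can be fixed one at a time.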

Chain of assumptions in ML-

Fig-3 (Structure of the chain of assumptions in ML)
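The figure is not reproduced here, so the sketch below assumes the four-link chain taught in Andrew Ng's "Structuring Machine Learning Projects" course (fit the training set well, then the dev set, then the test set, then perform well in the real world), together with the knobs that course associates with each link. It also illustrates orthogonalization: the function finds the first broken link and suggests only the knobs aimed at that link. The `Results` and `first_broken_link` names and the thresholds `target_error` and `gap` are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Each link in the chain of assumptions, paired with knobs that mainly affect
# that link (an assumed mapping, following the course; a guide, not a rule).
CHAIN = [
    ("fit training set well on cost function", ["bigger network", "better optimizer such as Adam"]),
    ("fit dev set well on cost function", ["regularization", "bigger training set"]),
    ("fit test set well on cost function", ["bigger dev set"]),
    ("perform well in the real world", ["change dev set or cost function"]),
]


@dataclass
class Results:
    train_error: float
    dev_error: float
    test_error: float
    works_in_real_world: bool


def first_broken_link(
    r: Results, target_error: float, gap: float = 0.02
) -> Optional[tuple[str, list[str]]]:
    """Return (link, knobs) for the first assumption that fails, or None."""
    checks = [
        r.train_error <= target_error,      # training error close to the goal?
        r.dev_error - r.train_error <= gap,  # does it generalize to the dev set?
        r.test_error - r.dev_error <= gap,   # has the dev set been overfit?
        r.works_in_real_world,              # does the metric reflect the real goal?
    ]
    for ok, (link, knobs) in zip(checks, CHAIN):
        if not ok:
            return link, knobs
    return None


print(first_broken_link(Results(0.08, 0.10, 0.11, False), target_error=0.05))
# ('fit training set well on cost function', ['bigger network', 'better optimizer such as Adam'])
print(first_broken_link(Results(0.03, 0.10, 0.11, True), target_error=0.05))
# ('fit dev set well on cost function', ['regularization', 'bigger training set'])
```

Checking the links in order matters: there is little point tuning for the dev set while the model still cannot fit the training set.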

Single number evaluation metric-

Suppose you have two classifiers and evaluate them on two metrics, such as precision and recall. If one classifier does better on one metric and the other does better on the second, it is not obvious which one is superior, and if you are testing a lot of different classifiers, it is simply difficult to look at all these numbers and quickly pick one. So, to track performance, it is best to choose a reasonable single real-number evaluation metric (for example the F1 score, which combines precision and recall) and keep iterating against it.

The idea of structuring machine learning projects works on a constructive feedback principle: you build a model, get feedback from metrics, make improvements and continue until you achieve the desired accuracy. Evaluation metrics explain the performance of a model, and an important aspect of an evaluation metric is its ability to discriminate among model results.
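A minimal sketch of turning two metrics into one number: the F1 score (the harmonic mean of precision and recall) is computed for each candidate, and the best classifier is picked by it. Classifiers `A` and `B` and their precision/recall values are illustrative, not results from this post, and `f1_score`/`pick_best` are hypothetical helper names (scikit-learn's `sklearn.metrics.f1_score` computes the same score directly from labels and predictions).

```python
from dataclasses import dataclass


@dataclass
class Classifier:
    name: str
    precision: float
    recall: float


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (defined as 0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pick_best(classifiers: list[Classifier]) -> Classifier:
    """Choose the classifier with the highest single-number metric."""
    return max(classifiers, key=lambda c: f1_score(c.precision, c.recall))


candidates = [Classifier("A", 0.95, 0.90), Classifier("B", 0.98, 0.85)]
for c in candidates:
    print(f"{c.name}: F1 = {f1_score(c.precision, c.recall):.3f}")
print("best:", pick_best(candidates).name)
# A: F1 = 0.924
# B: F1 = 0.910
# best: A
```

B has the higher precision and A the higher recall, so neither wins on both; the single F1 number settles the comparison in favor of A.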

